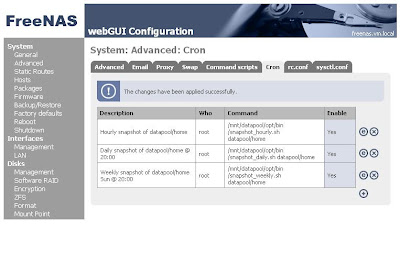

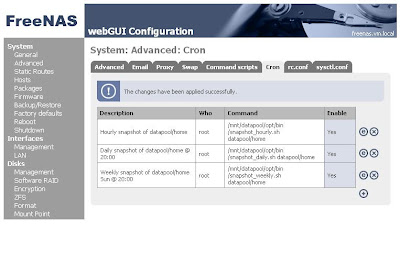

Jonas said... I'm having performance issues with RAID-Z on FreeNAS. Right now I have four 500 GB disks in a raidz1 pool.

Writing a 1 GB file to my pool with dd only gives a write performance of 47 MB/s. If I test a single disk with diskinfo -tv, I get transfer rates of 48-82 MB/s.
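A small helper like the following could make the dd measurement in the comment repeatable. It is a minimal sketch and not how Jonas ran his test. The function name write_test and the example path /mnt/tank/ddtest are hypothetical. Timing with date +%s only has one-second resolution, so use a large size such as 1024 MiB. If compression is enabled on the dataset, a file of zeros compresses away and the result will look unrealistically fast.

```shell
#!/bin/sh
# Sketch: repeatable sequential-write test with dd.
# write_test TARGET SIZE_MB
#   TARGET  - file to create on the pool (hypothetical path, e.g. /mnt/tank/ddtest)
#   SIZE_MB - amount of data to write, in MiB
# Prints the average write rate in whole MiB/s and removes the test file.
write_test() {
    target="$1"
    size_mb="$2"
    start=$(date +%s)
    # bs=1048576 (1 MiB) is accepted by both FreeBSD and GNU dd
    dd if=/dev/zero of="$target" bs=1048576 count="$size_mb" 2>/dev/null || return 1
    sync    # ask the kernel to flush buffered writes before stopping the clock
    end=$(date +%s)
    elapsed=$((end - start))
    if [ "$elapsed" -lt 1 ]; then
        elapsed=1    # date +%s has one-second resolution; avoid dividing by zero
    fi
    echo $((size_mb / elapsed))
    rm -f "$target"
}
```

For example, `write_test /mnt/tank/ddtest 1024` writes 1 GiB and prints the rate. Running it several times gives a better picture than a single run.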

freenas:~# pkg_add -r net/samba-libsmbclient-3.0.28

freenas:~# pkg_add -r net/samba-nmblookup-3.0.28.tbz

freenas:~# pkg_add -r net/samba-3.0.28,1

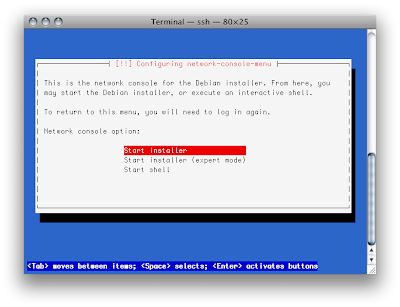

I have a QNAP TS-209 at home, but I am not really happy with it. One of my concerns is security. The firmware I used (2.0.1 Build 0324T) had a massive security hole.

The file .ssh/authorized_keys is overwritten on every reboot with a key for an unknown user (admin@Richard-TS209). See authorized_keys overwritten at reboot.

I am not sure whether QNAP has fixed this issue in the new firmware, but it showed me that my QNAP is not the device where I want to store my private data.

Some time ago I read a post saying that Martin Michlmayr is working on a QNAP port of Debian. See more details about Martin on his website and on Wikipedia.

Now there is an easy way to install Debian on your QNAP. See Martin's description here. Read it carefully!

Here I want to share my experiences with Debian on my QNAP TS-209.

The first step is to create a backup of the original firmware:

cd /share/HDA_DATA/public

cat /dev/mtdblock1 > mtd1

cat /dev/mtdblock2 > mtd2

Save both files to a USB stick or download them to your workstation!
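Recording checksums right after creating mtd1 and mtd2 lets you confirm that the copies on the USB stick or workstation are intact before you need them. This is a sketch, not part of Martin's instructions. It assumes an md5sum that supports -c (GNU coreutils, or a BusyBox build with checking enabled). The function names and the checksum file name mtd.md5 are hypothetical.

```shell
#!/bin/sh
# Sketch: checksum the firmware backup files mtd1 and mtd2 and verify copies later.
# The argument is whatever directory holds mtd1 and mtd2.

# Write mtd.md5 next to the backup files.
record_checksums() {
    (cd "$1" && md5sum mtd1 mtd2 > mtd.md5)
}

# Re-check the files against mtd.md5; returns non-zero on any mismatch.
verify_checksums() {
    (cd "$1" && md5sum -c mtd.md5)
}
```

On the QNAP you would run `record_checksums /share/HDA_DATA/public`. Then copy mtd1, mtd2 and mtd.md5 together and run verify_checksums on the directory that holds the copies.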

Now download the required installer images:

wget http://people.debian.org/~joeyh/d-i/armel/images/daily/orion5x/netboot/qnap/ts-209/flash-debian

wget http://people.debian.org/~joeyh/d-i/armel/images/daily/orion5x/netboot/qnap/ts-209/initrd.gz

wget http://people.debian.org/~joeyh/d-i/armel/images/daily/orion5x/netboot/qnap/ts-209/kernel
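Because a truncated download would be written straight to flash, a quick check before running flash-debian may be worthwhile. The following is a minimal sketch under these assumptions: the function name check_installer_files is hypothetical, and the shell provides a gzip that supports -t. It only checks that the three files exist, are non-empty, and that initrd.gz is an intact gzip stream. It cannot tell whether the images are the right ones for the TS-209.

```shell
#!/bin/sh
# Sketch: sanity-check the downloaded installer files before flashing.
check_installer_files() {
    dir="${1:-.}"    # directory holding the downloads (defaults to the current one)
    for f in flash-debian initrd.gz kernel; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $f" >&2
            return 1
        fi
    done
    # gzip -t tests the compressed file's integrity without extracting it
    if ! gzip -t "$dir/initrd.gz" 2>/dev/null; then
        echo "initrd.gz is not a valid gzip file" >&2
        return 1
    fi
    echo "all installer files present"
}
```

Run it in the download directory, for example `check_installer_files && sh flash-debian`, so that the flash script only starts if the check passes.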

Run the following script to write the kernel and initrd.gz to flash:

sh flash-debian

This will take some time...

Writing debian-installer to flash... done.

Please reboot your QNAP device.

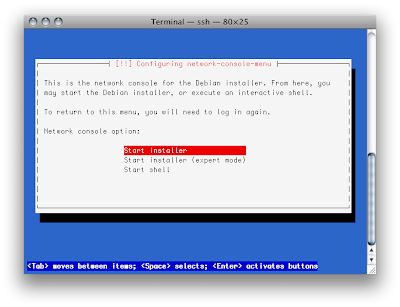

The Intel D945GCLF will be my new home server. Currently I am using a QNAP TS-209, but it does not meet my requirements. As I often work with big files (videos from my HD camera) and have lots of photos, the QNAP is a little too slow.

freenas:~# zfs list