Wednesday, December 16, 2009

Episode about the future of FreeNAS on bsdtalk

Saturday, December 5, 2009

The NEW Future of FreeNAS...

FreeNAS needs some major changes to remove its current limitations (one of the biggest is the lack of support for easy user add-ons).

We think a full rewrite of the FreeNAS base is needed. Based on this idea, we will take two different paths:

- Volker will create a new project called "OpenMediaVault", based on GNU/Linux, drawing on all the experience he gained during the nights and weekends he spent improving FreeNAS over the last two years. He will continue to work on FreeNAS (and will try to split his time between the two projects).

- And, a great surprise: iXsystems, a company specializing in professional FreeBSD solutions, has offered to take FreeNAS under its wing as an open source, community-driven project. This means they will assign their professional FreeBSD developers to FreeNAS! Their manpower will make a full rewrite of FreeNAS possible.

Monday, November 23, 2009

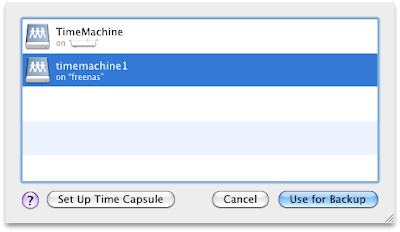

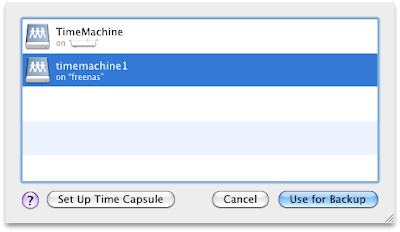

-> Enable AFP

- Automatic disk discovery - Enable automatic disk dicovery

- Automatic disk discovery mode - Time Machine

-> Select System Preferences -> Time Machine

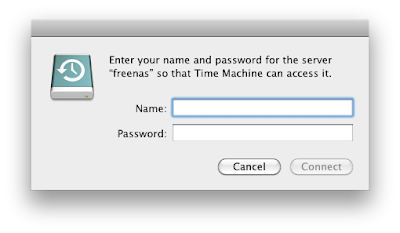

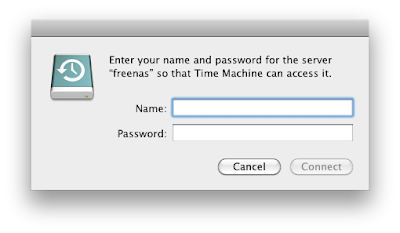

-> and Authenticate

-> and Authenticate Thats it!

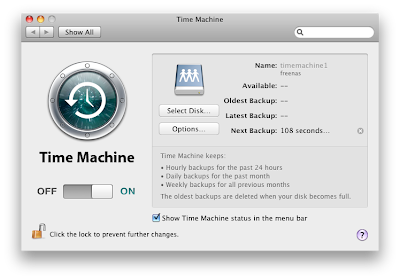

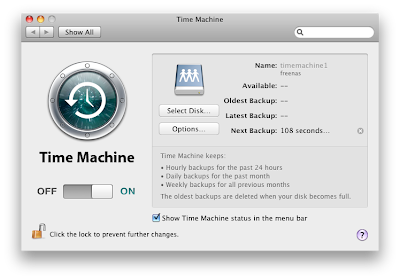

Thats it!

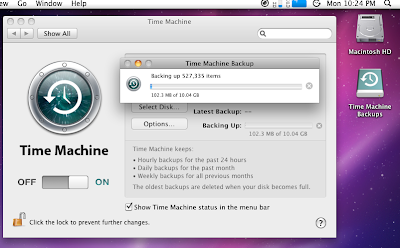

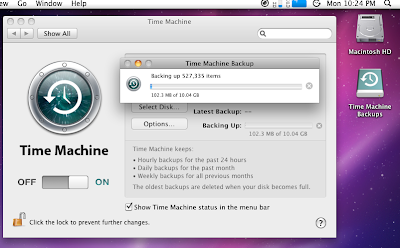

Your FreeNAS will work now similar than a TimeCapsule. Enjoy! Details can be found here...

I've combined this with ZFS. With two clients and regular backups since two months, I have here a compression (gzip) enabled volume with 238 GByte. The compression ratio (compressratio) is at 1.24x

freenas:~# zfs get compressratio data0/timemachine

NAME PROPERTY VALUE SOURCE

data0/timemachine compressratio 1.24x -

Instead of 295 GByte, only 238 GBytes are used. IMHO this is great :-)

Mac OSX Time Machine and FreeNAS 0.7

-> Enable AFP

- Automatic disk discovery - Enable automatic disk dicovery

- Automatic disk discovery mode - Time Machine

-> Select System Preferences -> Time Machine

-> and Authenticate

-> and Authenticate Thats it!

Thats it!

Your FreeNAS will work now similar than a TimeCapsule. Enjoy! Details can be found here...

I've combined this with ZFS. With two clients and regular backups since two months, I have here a compression (gzip) enabled volume with 238 GByte. The compression ratio (compressratio) is at 1.24x

freenas:~# zfs get compressratio data0/timemachine

NAME PROPERTY VALUE SOURCE

data0/timemachine compressratio 1.24x -

Instead of 295 GByte, only 238 GByte are used. IMHO, this is great :-)
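A quick way to check these numbers: ZFS reports compressratio as a multiplier, roughly the logical (uncompressed) size divided by the space physically used, so multiplying the used space by the ratio estimates how much space the backups would need without compression. A minimal Python sketch; the function names are illustrative, and since compressratio is rounded to two decimals the result is only an estimate:

```python
def uncompressed_size(used: float, compressratio: float) -> float:
    """Estimate the logical (uncompressed) size of a ZFS dataset.

    compressratio is roughly logical size / physically used size,
    so used * compressratio approximates the size without compression.
    """
    return used * compressratio


def space_saved(used: float, compressratio: float) -> float:
    """Space saved by compression, in the same unit as `used`."""
    return uncompressed_size(used, compressratio) - used


if __name__ == "__main__":
    used_gbyte = 238.0  # space used by data0/timemachine, from this post
    ratio = 1.24        # reported by: zfs get compressratio data0/timemachine
    print(f"uncompressed: ~{uncompressed_size(used_gbyte, ratio):.0f} GByte")
    print(f"saved:        ~{space_saved(used_gbyte, ratio):.0f} GByte")
```

With the values from this post it gives about 295 GByte uncompressed, or roughly 57 GByte saved by gzip compression.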

Sunday, November 22, 2009

Overview of NAS Operating Systems

- CryptoNAS (http://cryptonas.org/) - Free

- EON (http://sites.google.com/site/eonstorage/, Blog http://eonstorage.blogspot.com/) - Free

- EuroNAS (http://euronas.de/web/index.php) - Commercial

- FreeNAS (http://www.freenas.org/) - Free

- Napp-it (http://www.napp-it.org/) - Free

- NASLite (http://www.serverelements.com/naslite.php) - Commercial

- NexentaStor (http://www.nexenta.com/) - Commercial (Developer Edition is restricted to 4TB)

- Open-E (http://www.open-e.com/) - Commercial (Lite version is restricted to 2TB)

- OpenFiler (http://www.openfiler.com/) - Free (commercial support is available)

- PulsarOS (http://pulsaros.digitalplayground.at/) - Free (?), not released yet

- UnRAID (http://www.lime-technology.com) - Commercial (Basic version is restricted to 3 drives)

Friday, November 20, 2009

The Future of FreeNAS...

Wednesday, October 21, 2009

FreeNAS 0.7 - Samba tuning

Friday, October 9, 2009

FreeNAS 0.7, ZFS snapshots and scrubbing

Friday, July 17, 2009

M0n0wall and SixXS IPv6 - My first time...

Thursday, June 25, 2009

Benchmark of FreeNAS 0.7 and a single SSD

Test setup:

- CPU Intel Core 2 Quad Q6600 (2.4 GHz)

- 8 GB RAM

- Mainboard Intel DG965WH

- Network card Intel Pro/1000 PT

Additional settings under System|Advanced|sysctl.conf:

| Variable | Value | Comment |
|---|---|---|
| hw.ata.to | 15 | ATA disk timeout vis-a-vis power-saving |
| kern.coredump | 0 | Disable core dump |
| kern.ipc.maxsockbuf | 16777216 | System tuning (original value: 2097152) |
| kern.ipc.nmbclusters | 32768 | System tuning |
| kern.ipc.somaxconn | 8192 | System tuning |
| kern.maxfiles | 65536 | System tuning |
| kern.maxfilesperproc | 32768 | System tuning |
| net.inet.tcp.delayed_ack | 0 | System tuning |
| net.inet.tcp.inflight.enable | 0 | System tuning |
| net.inet.tcp.path_mtu_discovery | 0 | System tuning |
| net.inet.tcp.recvbuf_auto | 1 | http://acs.lbl.gov/TCP-tuning/FreeBSD.html |
| net.inet.tcp.recvbuf_inc | 524288 | http://fasterdata.es.net/TCP-tuning/FreeBSD.html |
| net.inet.tcp.recvbuf_max | 16777216 | http://acs.lbl.gov/TCP-tuning/FreeBSD.html |
| net.inet.tcp.recvspace | 65536 | System tuning |
| net.inet.tcp.rfc1323 | 1 | http://acs.lbl.gov/TCP-tuning/FreeBSD.html |
| net.inet.tcp.sendbuf_auto | 1 | http://acs.lbl.gov/TCP-tuning/FreeBSD.html |
| net.inet.tcp.sendbuf_inc | 16384 | http://fasterdata.es.net/TCP-tuning/FreeBSD.html |
| net.inet.tcp.sendbuf_max | 16777216 | http://acs.lbl.gov/TCP-tuning/FreeBSD.html |
| net.inet.tcp.sendspace | 65536 | System tuning |
| net.inet.udp.maxdgram | 57344 | System tuning |
| net.inet.udp.recvspace | 65536 | System tuning |
| net.local.stream.recvspace | 65536 | System tuning |
| net.local.stream.sendspace | 65536 | System tuning |
| net.inet.tcp.hostcache.expire | 1 | http://fasterdata.es.net/TCP-tuning/FreeBSD.html |
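If you want to try the same values on a plain FreeBSD machine instead of through the FreeNAS web GUI, they can be written as name=value lines in /etc/sysctl.conf. The Python sketch below (hypothetical helper names, and only a subset of the settings above) renders such a file and flags TCP auto-tuning maxima that exceed kern.ipc.maxsockbuf. The check rests on an assumption not stated in the original post: that FreeBSD caps each socket buffer at a limit derived from kern.ipc.maxsockbuf, which would explain why that value was raised from 2097152 to 16777216 together with recvbuf_max and sendbuf_max.

```python
from __future__ import annotations

# Subset of the sysctl.conf settings above (values copied from the post).
SETTINGS = {
    "kern.ipc.maxsockbuf": 16777216,
    "net.inet.tcp.rfc1323": 1,
    "net.inet.tcp.recvbuf_auto": 1,
    "net.inet.tcp.recvbuf_inc": 524288,
    "net.inet.tcp.recvbuf_max": 16777216,
    "net.inet.tcp.sendbuf_auto": 1,
    "net.inet.tcp.sendbuf_inc": 16384,
    "net.inet.tcp.sendbuf_max": 16777216,
}

# Auto-tuning maxima that (by assumption) must fit under kern.ipc.maxsockbuf.
BUFFER_MAXIMA = ("net.inet.tcp.recvbuf_max", "net.inet.tcp.sendbuf_max")


def to_sysctl_conf(settings: dict[str, int]) -> str:
    """Render settings as /etc/sysctl.conf lines, one name=value per line."""
    return "".join(f"{name}={value}\n" for name, value in sorted(settings.items()))


def oversized_buffers(settings: dict[str, int]) -> list[str]:
    """Return the auto-tuning maxima that exceed kern.ipc.maxsockbuf."""
    limit = settings["kern.ipc.maxsockbuf"]
    return [name for name in BUFFER_MAXIMA if settings.get(name, 0) > limit]


if __name__ == "__main__":
    print(to_sysctl_conf(SETTINGS), end="")
    # With the original maxsockbuf value (2097152), both maxima are too large.
    print(oversized_buffers({**SETTINGS, "kern.ipc.maxsockbuf": 2097152}))
```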

The iozone options used:

- -e -- Include flush (fsync, fflush) in the timing calculations

- -i0 -- Test write/rewrite

- -i1 -- Test read/reread

- -i2 -- Test random read/write

- -+n -- Disable retests

- -r 64k -- Record (block) size

- -s8g -- Size of the file to test (2x the RAM of the client)

- -t2 -- Number of threads/processes (throughput mode)

- -c -- Include close() in the timing calculations

- -x -- Turn off stonewalling (I got slightly better results with it turned off; see the iozone man page for details)
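The -s value follows the rule in the list above: the test file is twice the client's RAM, so the client cannot serve the test from its own cache. A small Python sketch that builds the same command line from the client's RAM size; the helper name is hypothetical, and the 4 GB of client RAM is only inferred from -s8g being 2x RAM:

```python
from __future__ import annotations

import shlex


def iozone_argv(client_ram_gb: int, threads: int = 2, record: str = "64k") -> list[str]:
    """Build the iozone argument list used in this benchmark.

    The file size (-s) is twice the client's RAM, so the client's cache
    cannot hold the whole file and hide the real network/disk speed.
    """
    file_size = f"{2 * client_ram_gb}g"
    return [
        "iozone",
        "-e",                 # include fsync/fflush in timings
        "-i0", "-i1", "-i2",  # write/rewrite, read/reread, random read/write
        "-+n",                # no retests
        "-r", record,         # record (block) size
        f"-s{file_size}",     # file size per process
        f"-t{threads}",       # throughput mode with N processes
        "-c",                 # include close() in timings
        "-x",                 # stonewalling off
    ]


if __name__ == "__main__":
    # A client with 4 GB RAM reproduces the -s8g used above.
    print(shlex.join(iozone_argv(client_ram_gb=4)))
```

This prints exactly the command line used in this post. To run it, the list can be passed to subprocess.run(iozone_argv(4), check=True), presumably from a directory on the mounted share; an argument list avoids shell quoting issues.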

iozone -e -i0 -i1 -i2 -+n -r 64k -s8g -t2 -c -x

Iozone: Performance Test of File I/O

Version $Revision: 3.323 $

Compiled for 32 bit mode.

Build: macosx

Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins

Al Slater, Scott Rhine, Mike Wisner, Ken Goss

Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,

Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,

Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy,

Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root.

Run began: Tue Jun 23 15:47:17 2009

Include fsync in write timing

No retest option selected

Record Size 64 KB

File size set to 8388608 KB

Include close in write timing

Stonewall disabled

Command line used: iozone -e -i0 -i1 -i2 -+n -r 64k -s8g -t2 -c -x

Output is in Kbytes/sec

Time Resolution = 0.000001 seconds.

Processor cache size set to 1024 Kbytes.

Processor cache line size set to 32 bytes.

File stride size set to 17 * record size.

Throughput test with 2 processes

Each process writes a 8388608 Kbyte file in 64 Kbyte records

Children see throughput for 2 initial writers = 79800.84 KB/sec

Parent sees throughput for 2 initial writers = 78177.66 KB/sec

Min throughput per process = 39088.91 KB/sec

Max throughput per process = 40711.93 KB/sec

Avg throughput per process = 39900.42 KB/sec

Min xfer = 8388608.00 KB

Children see throughput for 2 readers = 107705.98 KB/sec

Parent sees throughput for 2 readers = 107705.48 KB/sec

Min throughput per process = 53852.83 KB/sec

Max throughput per process = 53853.15 KB/sec

Avg throughput per process = 53852.99 KB/sec

Min xfer = 8388608.00 KB

Children see throughput for 2 random readers = 56735.84 KB/sec

Parent sees throughput for 2 random readers = 56735.20 KB/sec

Min throughput per process = 28367.65 KB/sec

Max throughput per process = 28368.19 KB/sec

Avg throughput per process = 28367.92 KB/sec

Min xfer = 8388608.00 KB

Children see throughput for 2 random writers = 24004.56 KB/sec

Parent sees throughput for 2 random writers = 23958.08 KB/sec

Min throughput per process = 11979.05 KB/sec

Max throughput per process = 12025.51 KB/sec

Avg throughput per process = 12002.28 KB/sec

Min xfer = 8388608.00 KB

iozone test complete.

Conclusion

The results show a steady throughput of about 77 MByte/s for writes and 105 MByte/s for reads, which IMHO is quite good for a single SSD. I'm not sure yet about the random read/write performance; I need to dig a bit deeper into this :-)
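To put the iozone figures in MByte/s and relate them to the network, here is a short Python sketch. It assumes iozone's KB means 1024 bytes and compares against the raw gigabit line rate (125,000,000 bytes/s, about 119 MByte/s) before any Ethernet, TCP or AFP overhead. By that measure the sequential reads reach roughly 88% of the raw link rate, which suggests, but does not prove, that the network rather than the SSD limits read throughput:

```python
# Raw gigabit Ethernet line rate in MByte/s (1 MByte = 1024 * 1024 bytes),
# ignoring protocol overhead, so real-world maxima are lower.
GIGABIT_MBYTE_S = 1_000_000_000 / 8 / 1024**2

# "Children see throughput" values (KB/sec) from the iozone run above.
RESULTS_KB_S = {
    "initial writers": 79800.84,
    "readers": 107705.98,
    "random readers": 56735.84,
    "random writers": 24004.56,
}


def kb_to_mbyte(kb_per_s: float) -> float:
    """Convert iozone KB/sec (assumed 1 KB = 1024 bytes) to MByte/s."""
    return kb_per_s / 1024


if __name__ == "__main__":
    for test, kb in RESULTS_KB_S.items():
        mb = kb_to_mbyte(kb)
        share = mb / GIGABIT_MBYTE_S
        print(f"{test:16} {mb:6.1f} MByte/s  {share:4.0%} of raw gigabit")
```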

P.S.

Maybe you're wondering 'Why AFP?' First of all, I use Apple computers at home, and AFP gave me the best throughput compared to NFS or Samba.

Friday, June 12, 2009

FreeNAS 0.7 - diskinfo of a SuperTalent UltraDrive ME MLC 64GB

I bought a SuperTalent UltraDrive ME MLC 64GB to play around with SSDs, and I will share my experiences here.

For the FreeNAS 0.7 ZFS tests, I installed this SSD in a PC with an Intel Core 2 Quad Q6600 (2.4 GHz), 8 GB RAM and an Intel DG965WH mainboard.

My first test is a quick diskinfo -ct. Here we go...

freenas:~# diskinfo -ct /dev/ad6

/dev/ad6

512 # sectorsize

64023257088 # mediasize in bytes (60G)

125045424 # mediasize in sectors

124053 # Cylinders according to firmware.

16 # Heads according to firmware.

63 # Sectors according to firmware.

ad:P565045-BDIX-6029019 # Disk ident.

I/O command overhead:

time to read 10MB block 0.068941 sec = 0.003 msec/sector

time to read 20480 sectors 1.169508 sec = 0.057 msec/sector

calculated command overhead = 0.054 msec/sector

Seek times:

Full stroke: 250 iter in 0.024970 sec = 0.100 msec

Half stroke: 250 iter in 0.025027 sec = 0.100 msec

Quarter stroke: 500 iter in 0.049870 sec = 0.100 msec

Short forward: 400 iter in 0.039449 sec = 0.099 msec

Short backward: 400 iter in 0.039230 sec = 0.098 msec

Seq outer: 2048 iter in 0.114818 sec = 0.056 msec

Seq inner: 2048 iter in 0.123779 sec = 0.060 msec

Transfer rates:

outside: 102400 kbytes in 0.696010 sec = 147124 kbytes/sec

middle: 102400 kbytes in 0.618375 sec = 165595 kbytes/sec

inside: 102400 kbytes in 0.623304 sec = 164286 kbytes/sec
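The header of this output is internally consistent, which makes it easy to read: mediasize in bytes equals the sector count times the sector size, and for this SSD the firmware geometry (cylinders x heads x sectors) multiplies out to exactly the sector count. The "(60G)" label uses binary units: 64,023,257,088 bytes is about 59.6 GiB, which is why a 64GB drive shows up as 60G. A Python sketch with illustrative names; the CHS product is not guaranteed to match the sector count on every disk:

```python
def check_geometry(sectorsize: int, mediasize: int, sectors: int,
                   cylinders: int, heads: int, sectors_per_track: int) -> dict:
    """Cross-check the header fields printed by diskinfo -ct."""
    return {
        # mediasize in bytes should equal sectors * sectorsize
        "size_ok": sectors * sectorsize == mediasize,
        # the firmware CHS geometry happens to multiply out exactly for this SSD
        "chs_ok": cylinders * heads * sectors_per_track == sectors,
        # diskinfo's "(60G)" label is in binary units (GiB)
        "gib": mediasize / 1024**3,
    }


if __name__ == "__main__":
    ssd = check_geometry(512, 64023257088, 125045424, 124053, 16, 63)
    print(ssd)
```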

For comparison, here is the same command run against a WD20EADS 2TB hard disk:

quake:~# diskinfo -ct /dev/ad4

/dev/ad4

512 # sectorsize

2000398934016 # mediasize in bytes (1.8T)

3907029168 # mediasize in sectors

3876021 # Cylinders according to firmware.

16 # Heads according to firmware.

63 # Sectors according to firmware.

I/O command overhead:

time to read 10MB block 0.072554 sec = 0.004 msec/sector

time to read 20480 sectors 2.515795 sec = 0.123 msec/sector

calculated command overhead = 0.119 msec/sector

Seek times:

Full stroke: 250 iter in 6.150143 sec = 24.601 msec

Half stroke: 250 iter in 4.960021 sec = 19.840 msec

Quarter stroke: 500 iter in 8.214024 sec = 16.428 msec

Short forward: 400 iter in 2.523203 sec = 6.308 msec

Short backward: 400 iter in 2.008208 sec = 5.021 msec

Seq outer: 2048 iter in 0.298850 sec = 0.146 msec

Seq inner: 2048 iter in 0.379041 sec = 0.185 msec

Transfer rates:

outside: 102400 kbytes in 1.077248 sec = 95057 kbytes/sec

middle: 102400 kbytes in 1.378671 sec = 74274 kbytes/sec

inside: 102400 kbytes in 2.267264 sec = 45165 kbytes/sec

As you can see, the seek times of the SSD are impressive: about 0.1 msec regardless of seek distance, versus roughly 5 to 25 msec for the hard disk.
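The per-seek figures from diskinfo are the total time divided by the number of iterations, and the transfer rates are 102400 kbytes divided by the elapsed time. A Python sketch (illustrative names) that recomputes them from the raw numbers above and shows how many times faster the SSD seeks than the WD20EADS:

```python
def per_seek_ms(iterations: int, seconds: float) -> float:
    """Average time per seek in msec, as printed by diskinfo -ct."""
    return seconds / iterations * 1000


def kbytes_per_sec(kbytes: int, seconds: float) -> float:
    """Transfer rate in kbytes/sec from a timed read of `kbytes`."""
    return kbytes / seconds


# (iterations, seconds) for the SSD and the WD20EADS, copied from above.
SEEKS = {
    "Full stroke": ((250, 0.024970), (250, 6.150143)),
    "Half stroke": ((250, 0.025027), (250, 4.960021)),
    "Quarter stroke": ((500, 0.049870), (500, 8.214024)),
    "Short forward": ((400, 0.039449), (400, 2.523203)),
    "Short backward": ((400, 0.039230), (400, 2.008208)),
}

if __name__ == "__main__":
    for name, (ssd, hdd) in SEEKS.items():
        ssd_ms, hdd_ms = per_seek_ms(*ssd), per_seek_ms(*hdd)
        print(f"{name:15} SSD {ssd_ms:6.3f} ms  HDD {hdd_ms:7.3f} ms  "
              f"SSD ~{hdd_ms / ssd_ms:.0f}x faster")
    print(f"SSD outside transfer: {kbytes_per_sec(102400, 0.696010):.0f} kbytes/sec")
```

For a full-stroke seek this works out to roughly 250 times faster on the SSD; even the short backward seeks are about 50 times faster.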